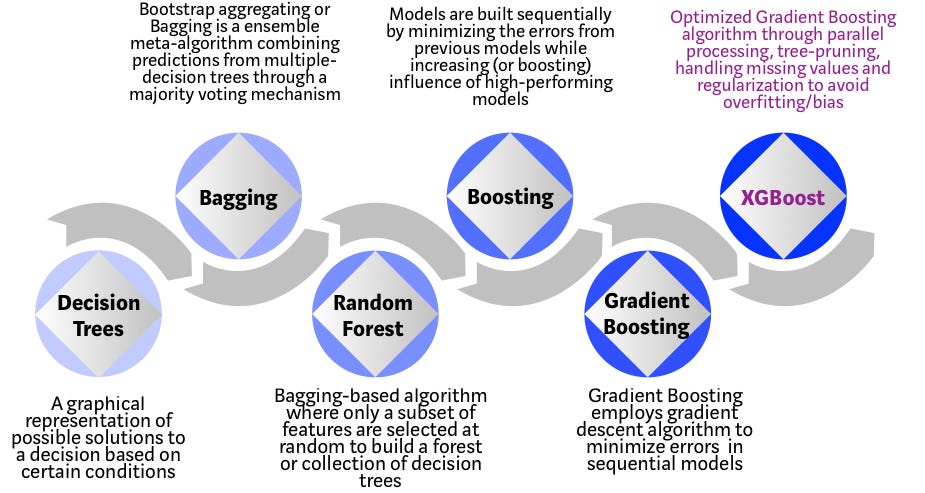

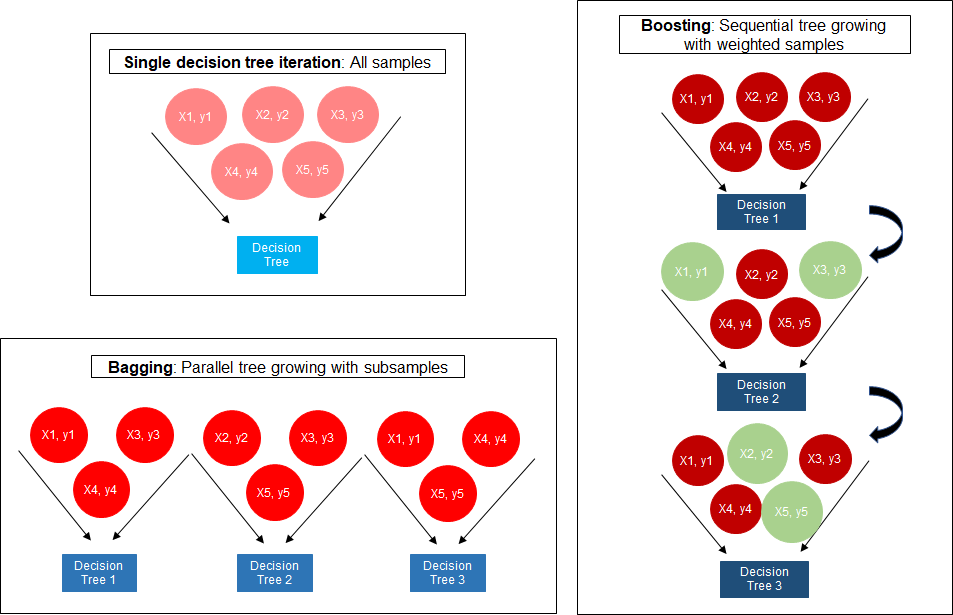

The idea behind boosting is to train predictors sequentially, with each subsequent model trying to fix the flaws of its predecessor. An extra randomisation parameter can be used to reduce the correlation between the trees, as seen in the previous article.

The Intuition Behind Gradient Boosting and XGBoost, by Bobby Tan Liang Wei (Towards Data Science)

XGBoost uses advanced regularization (L1 and L2), which improves the model's generalization capabilities.
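For reference, the regularized objective described in the XGBoost paper and documentation adds a complexity penalty Ω to the training loss for every tree f_k. The block below writes it out with both penalties. T is the number of leaves in a tree and w_j are its leaf weights. I read γ, λ and α as corresponding to XGBoost's `gamma`, `lambda` (`reg_lambda`) and `alpha` (`reg_alpha`) parameters, so treat the mapping as a guide rather than a spec.

```latex
\mathcal{L} = \sum_{i} l(y_i, \hat{y}_i) + \sum_{k} \Omega(f_k),
\qquad
\Omega(f) = \gamma T + \tfrac{1}{2}\lambda \sum_{j=1}^{T} w_j^2 + \alpha \sum_{j=1}^{T} \lvert w_j \rvert
```

The L2 term (λ) shrinks leaf weights smoothly, the L1 term (α) can push small leaf weights to exactly zero, and γ charges a fixed cost for every extra leaf.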

In this algorithm, decision trees are created sequentially. Having used both, I find XGBoost's speed quite impressive, and its performance is superior to scikit-learn's GradientBoosting. Gradient boosted trees use regression trees, or CART, as weak learners in a sequential learning process.
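Here is a minimal sketch that makes the sequential idea concrete. It assumes squared-error loss, a single numeric feature, and one-split regression stumps as the weak learners. These are not full CART trees, and this is not how scikit-learn or XGBoost implement it. Each round fits a stump to the residuals the ensemble still gets wrong and adds a shrunken copy of it. `fit_stump` and `boost` are hypothetical helper names.

```python
import numpy as np

def fit_stump(x, r):
    """Fit a one-split regression stump to residuals r on a 1-D feature x."""
    best = None
    # Candidate thresholds: every unique value except the largest,
    # so both sides of the split are non-empty.
    for t in np.unique(x)[:-1]:
        left, right = r[x <= t], r[x > t]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t, left.mean(), right.mean())
    _, t, left_value, right_value = best
    return lambda z: np.where(z <= t, left_value, right_value)

def boost(x, y, n_rounds=50, lr=0.1):
    """Sequential boosting: each stump is fitted to its predecessors' errors."""
    pred = np.full_like(y, y.mean(), dtype=float)  # start from the mean
    models = []
    for _ in range(n_rounds):
        residual = y - pred            # what the ensemble still gets wrong
        h = fit_stump(x, residual)     # new weak learner targets those errors
        pred = pred + lr * h(x)        # add a shrunken copy of it
        models.append(h)
    return pred, models
```

With a small learning rate such as 0.1, each stump corrects only part of the remaining error. That is why many rounds are needed.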

AdaBoost is the original boosting algorithm, developed by Freund and Schapire. AdaBoost and gradient boosting are both ensemble techniques used in machine learning to enhance the efficacy of weak learners. XGBoost delivers higher performance than standard gradient boosting.
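Below is a minimal sketch of AdaBoost's reweighting idea. It assumes labels in {-1, +1}, a single numeric feature, and threshold stumps as weak learners. `fit_weighted_stump` and `adaboost` are hypothetical helpers, not a library API. Each round does three things:

- picks the stump with the lowest weighted error,
- gives that stump a vote α = ½ ln((1 - err)/err),
- increases the weight of the points it misclassified, so the next stump concentrates on them.

```python
import numpy as np

def fit_weighted_stump(x, y, w):
    """Choose threshold/polarity minimising the weighted 0-1 error (labels in {-1, +1})."""
    best = (np.inf, None, None)
    for t in np.unique(x):
        for s in (1, -1):
            pred = np.where(x <= t, s, -s)
            err = w[pred != y].sum()
            if err < best[0]:
                best = (err, t, s)
    err, t, s = best
    return err, (lambda z: np.where(z <= t, s, -s))

def adaboost(x, y, n_rounds=10):
    n = len(y)
    w = np.full(n, 1.0 / n)                    # start with uniform sample weights
    learners = []
    for _ in range(n_rounds):
        err, h = fit_weighted_stump(x, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)   # guard against log(0) / division by zero
        alpha = 0.5 * np.log((1 - err) / err)  # this learner's vote weight
        w = w * np.exp(-alpha * y * h(x))      # up-weight misclassified points
        w = w / w.sum()                        # renormalise to a distribution
        learners.append((alpha, h))

    def predict(z):
        # Weighted majority vote of all stumps.
        return np.sign(sum(a * stump(z) for a, stump in learners))

    return predict
```

Consider toy data with x = 0..9 and labels +1, +1, +1, -1, -1, -1, +1, +1, +1, +1. No single stump separates these points, but with this tie-breaking the weighted vote of three stumps classifies every training point correctly.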

Gradient boosted trees have been around for a while, and there is a lot of material on the topic. The training methods used by the two algorithms are different. Gradient descent is an algorithm for finding a set of parameters that optimizes a loss function.

Gradient descent and a gradient boosting machine are two different algorithms, but there is some connection between them.

There is a technique called gradient boosted trees whose base learner is CART (Classification and Regression Trees). I think the difference between gradient boosting and XGBoost is that XGBoost focuses on computational power by parallelizing tree construction, which one can see in this blog. Newton boosting uses the Newton-Raphson method of approximation, which provides a more direct route to the minimum than gradient descent.
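Here is a toy illustration of that last claim. It assumes a one-dimensional quadratic loss f(ϕ) = 3(ϕ - 2)², made up for this example. Newton-Raphson divides the gradient by the second derivative, so on a quadratic it lands on the minimum in one step. Plain gradient descent with a fixed learning rate only closes part of the gap on each step.

```python
def grad(phi):
    return 6.0 * (phi - 2.0)      # f'(phi) for f(phi) = 3 * (phi - 2)**2

def hess(phi):
    return 6.0                    # f''(phi), constant for a quadratic

def newton_step(phi):
    return phi - grad(phi) / hess(phi)   # Newton-Raphson: step scaled by curvature

def gd_step(phi, lr=0.1):
    return phi - lr * grad(phi)          # gradient descent: fixed step size

phi_newton = newton_step(0.0)    # one Newton step from phi = 0

phi_gd = 0.0
for _ in range(5):               # five gradient-descent steps from phi = 0
    phi_gd = gd_step(phi_gd)
```

After five gradient-descent steps, ϕ is still about 0.02 away from the minimum. On non-quadratic losses Newton's method is not exact in one step either, but it uses curvature to choose the step size.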

XGBoost is faster than gradient boosting, but gradient boosting has a wide range of applications. So what are the differences between adaptive boosting and gradient boosting? Given a loss function f(x, ϕ), where x is an n-dimensional vector and ϕ is a set of parameters, gradient descent operates by computing the gradient of f with respect to ϕ.
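Here is a small illustration of gradient descent. It assumes a mean-squared-error loss for a linear model with parameters ϕ, fitted to three made-up data points that lie exactly on y = 1 + 2x. The update repeatedly nudges ϕ against the gradient. `gradient_descent` and `mse_grad` are hypothetical helper names.

```python
import numpy as np

def gradient_descent(grad, phi0, lr=0.1, n_steps=1000):
    """Repeatedly step the parameters against the gradient of the loss."""
    phi = np.asarray(phi0, dtype=float)
    for _ in range(n_steps):
        phi = phi - lr * grad(phi)
    return phi

# Made-up data: a column of ones (intercept) plus one feature; y = 1 + 2 * x exactly.
X = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
y = np.array([1.0, 3.0, 5.0])

def mse_grad(phi):
    """Gradient of mean((X @ phi - y)**2) with respect to phi."""
    return 2.0 * X.T @ (X @ phi - y) / len(y)

phi = gradient_descent(mse_grad, [0.0, 0.0], lr=0.1, n_steps=2000)
```

Here ϕ approaches (1, 2). Gradient boosting applies the same idea in function space. Instead of nudging a parameter vector, each round adds a new model fitted to the negative gradient of the loss.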

XGBoost is quite memory-efficient and can be parallelized (I think scikit-learn's implementation cannot do so by default). I don't know exactly how memory-efficient scikit-learn's version is, but I am pretty confident it is below XGBoost's. XGBoost is a more regularized form of gradient boosting. Both are boosting algorithms, which means that they convert a set of weak learners into a single strong learner.

So, having understood what boosting is, let us discuss the competition between two popular boosting algorithms: Light Gradient Boosting Machine (LightGBM) and Extreme Gradient Boosting (XGBoost). AdaBoost is short for adaptive boosting. Plain gradient boosting does not explicitly manage the trade-off between bias and variance, whereas XGBoost can also address it through its regularization factor.

XGBoost is specifically designed to train gradient boosted decision trees. Originally published by Rohith Gandhi on May 5th, 2018 (41,943 reads).

Extreme Gradient Boosting (XGBoost) is one of the most popular variants of gradient boosting and an advanced implementation of the gradient boosting algorithm.

Over the years, gradient boosting has found applications across various technical fields. The lower the correlation among classifiers, the better our ensemble of classifiers will turn out. XGBoost is a decision-tree-based ensemble machine learning algorithm that uses a gradient boosting framework.
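Here is one way to see why lower correlation helps. Assume an averaging ensemble, as in a random forest, of n predictors that each have variance σ² and share the same pairwise correlation ρ. A standard result gives the variance of their average as ρσ² + (1 - ρ)σ²/n. Only the second term shrinks as more models are added, so reducing ρ is what lowers the floor. The sketch checks the closed form against the direct computation 1ᵀΣ1/n² for an equicorrelated covariance matrix Σ. Both function names are hypothetical.

```python
import numpy as np

def avg_variance_formula(sigma2, rho, n):
    """Variance of the mean of n equicorrelated predictors (closed form)."""
    return rho * sigma2 + (1.0 - rho) * sigma2 / n

def avg_variance_direct(sigma2, rho, n):
    """Same quantity computed as 1^T Sigma 1 / n^2 from the covariance matrix."""
    # Diagonal entries are sigma2, off-diagonal entries are rho * sigma2.
    cov = sigma2 * (rho * np.ones((n, n)) + (1.0 - rho) * np.eye(n))
    ones = np.ones(n)
    return ones @ cov @ ones / n**2
```

With σ² = 1 and n = 10 predictors, the variance of the average is 0.1 for ρ = 0, 0.55 for ρ = 0.5, and 0.91 for ρ = 0.9.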

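AdaBoost (Adaptive Boosting) works by focusing each new learner on the training examples that earlier learners misclassified. XGBoost is an implementation of the GBM, and in the GBM you can configure which base learner is used. The different types of boosting algorithms include AdaBoost, gradient boosting and XGBoost.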

Gradient boosted trees consider the special case where the simple model h is a decision tree. GBM is an algorithm, and you can find the details in Jerome Friedman's paper "Greedy Function Approximation: A Gradient Boosting Machine".
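In Friedman's formulation, each stage adds one simple model h_m, scaled by a learning rate ν. That model is fitted to the negative gradient of the loss L at the current model, known as the pseudo-residuals. Here is a simplified statement of the update, omitting Friedman's separate line-search step:

```latex
r_{im} = -\left[ \frac{\partial L\big(y_i, F(x_i)\big)}{\partial F(x_i)} \right]_{F = F_{m-1}},
\qquad
F_m(x) = F_{m-1}(x) + \nu \, h_m(x)
```

Here h_m is fitted to the pairs (x_i, r_im). For squared-error loss L = ½(y - F)², the pseudo-residuals are just the ordinary residuals y_i - F_{m-1}(x_i). In gradient boosted trees, h_m is a regression tree.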

The base learner can be a tree, a stump or another model, even a linear model. XGBoost's training is very fast and can be parallelized or distributed across clusters.

AdaBoost, gradient boosting and XGBoost are three algorithms that do not get much recognition.

While regular gradient boosting uses the loss function of the base model (e.g. a decision tree) as a proxy for minimizing the error of the overall model, XGBoost uses the second-order derivative as an approximation. XGBoost is an algorithm, an open-source project and a Python library.

Difference between XGBoost and gradient boosting, written by melson, Friday, March 18, 2022.

These algorithms yield the best results in many competitions and hackathons hosted on multiple platforms. Gradient descent then moves downhill by nudging the parameters against the gradient. It worked, but it wasn't that efficient.

XGBoost is designed to enhance the performance and speed of a machine learning model. It is an implementation of gradient boosted decision trees. Boosting is a method of converting a set of weak learners into a strong learner.

XGBoost was developed to increase speed and performance while introducing regularization parameters to reduce overfitting. Gradient boosting was developed as a generalization of AdaBoost, by observing that what AdaBoost was doing was a gradient search in decision-tree space against a particular loss function (the exponential loss).
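Here is a sketch of what the second-order approximation buys. It computes the per-leaf quantities from the XGBoost paper, assuming the logistic loss on raw margin scores:

- Each example contributes a gradient g and a Hessian h.
- A leaf's optimal weight is -G/(H + λ), where G and H sum g and h over the leaf.
- A split is scored by how much it improves G²/(H + λ) on each side, minus γ.

The helper names and toy data are hypothetical. The real library adds many refinements, such as histogram-based split finding, sparsity handling and `min_child_weight`.

```python
import numpy as np

def logistic_grad_hess(y, margin):
    """First and second derivatives of the log loss with respect to the raw margin."""
    p = 1.0 / (1.0 + np.exp(-margin))   # predicted probability
    return p - y, p * (1.0 - p)

def leaf_weight(g, h, lam=1.0):
    """Optimal leaf weight under the second-order approximation with L2 penalty lam."""
    return -g.sum() / (h.sum() + lam)

def split_gain(g, h, left_mask, lam=1.0, gamma=0.0):
    """Improvement in the second-order objective from splitting a node, minus gamma."""
    def score(gs, hs):
        return gs.sum() ** 2 / (hs.sum() + lam)
    gl, hl = g[left_mask], h[left_mask]
    gr, hr = g[~left_mask], h[~left_mask]
    return 0.5 * (score(gl, hl) + score(gr, hr) - score(g, h)) - gamma
```

Take four examples labelled 0, 0, 1, 1 with an initial margin of 0. With λ = 1, splitting the two 0s from the two 1s gives a gain of 2/3. The left leaf gets weight -2/3, which pushes those margins toward the negative class.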

We can use XGBoost to train a standalone random forest, or use a random forest as the base model for gradient boosting. XGBoost can also use a linear model as the base learner.
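The idea of a linear base learner can be sketched without the library, assuming squared-error loss. Each round fits an ordinary least-squares model to the current residuals and adds a shrunken copy. A sum of linear models is itself linear, so the ensemble converges to the plain least-squares fit. In XGBoost itself, a linear base learner is selected with `booster="gblinear"`, which uses its own coordinate-descent-style updaters and regularization. Treat this as an illustration of the concept rather than of the library's internals. `boost_linear` is a hypothetical name.

```python
import numpy as np

def boost_linear(X, y, n_rounds=200, lr=0.3):
    """Boosting with least-squares linear base learners (illustrative only)."""
    Xb = np.column_stack([np.ones(len(X)), X])  # add an intercept column
    coef = np.zeros(Xb.shape[1])                # the whole ensemble is one linear model
    for _ in range(n_rounds):
        residual = y - Xb @ coef
        # Fit a linear base learner to the residuals.
        step, *_ = np.linalg.lstsq(Xb, residual, rcond=None)
        coef += lr * step                       # add a shrunken copy of it
    return coef
```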

XGBoost computes second-order gradients, i.e. second partial derivatives of the loss function, which provide more information about the direction of the gradients and how to reach the minimum of the loss function.

Visually, this diagram is taken from XGBoost's documentation. XGBoost models dominate many Kaggle competitions.


